Waiting State

TwitterRadio is a physical system that looks for tweets containing #twitterradio, analyzes the emotion of each tweet, and plays a song accompanied by light that corresponds to the returned emotion.

Off State

Background

In the past five years, social media has become an increasingly large part of people's day-to-day lives. Today, many people post personal information on the Internet in the hope of receiving feedback such as likes or shares. I wanted to create a new way to give real-time feedback to social media users. In particular, I am fascinated by how the ways in which we interact with social media affect our emotions. I decided to perform sentiment analysis on Twitter posts. (I used Twitter because it is one of the main social media platforms that active users post to regularly.) In return, the output – light and sound – would correspond to the returned emotion. From this, the idea of TwitterRadio came to life.

Fabrication Process

Inspired by the name of my project, TwitterRadio, I fabricated the enclosure to look like an old-fashioned radio. To make the enclosure seem even more like a real radio, I play music from a Bluetooth speaker hidden inside it. I also wanted the radio to appear 'alive' when it is not in use. While TwitterRadio waits for a tweet, neopixels light up in an active rainbow pattern. I chose a rainbow pattern because I did not want the waiting state to correlate with any one of the returned emotions. Rather, with this visual output, the radio appears as though it's cycling through the possible emotions to display. To learn more about the implementation process, please visit my in-progress blog.

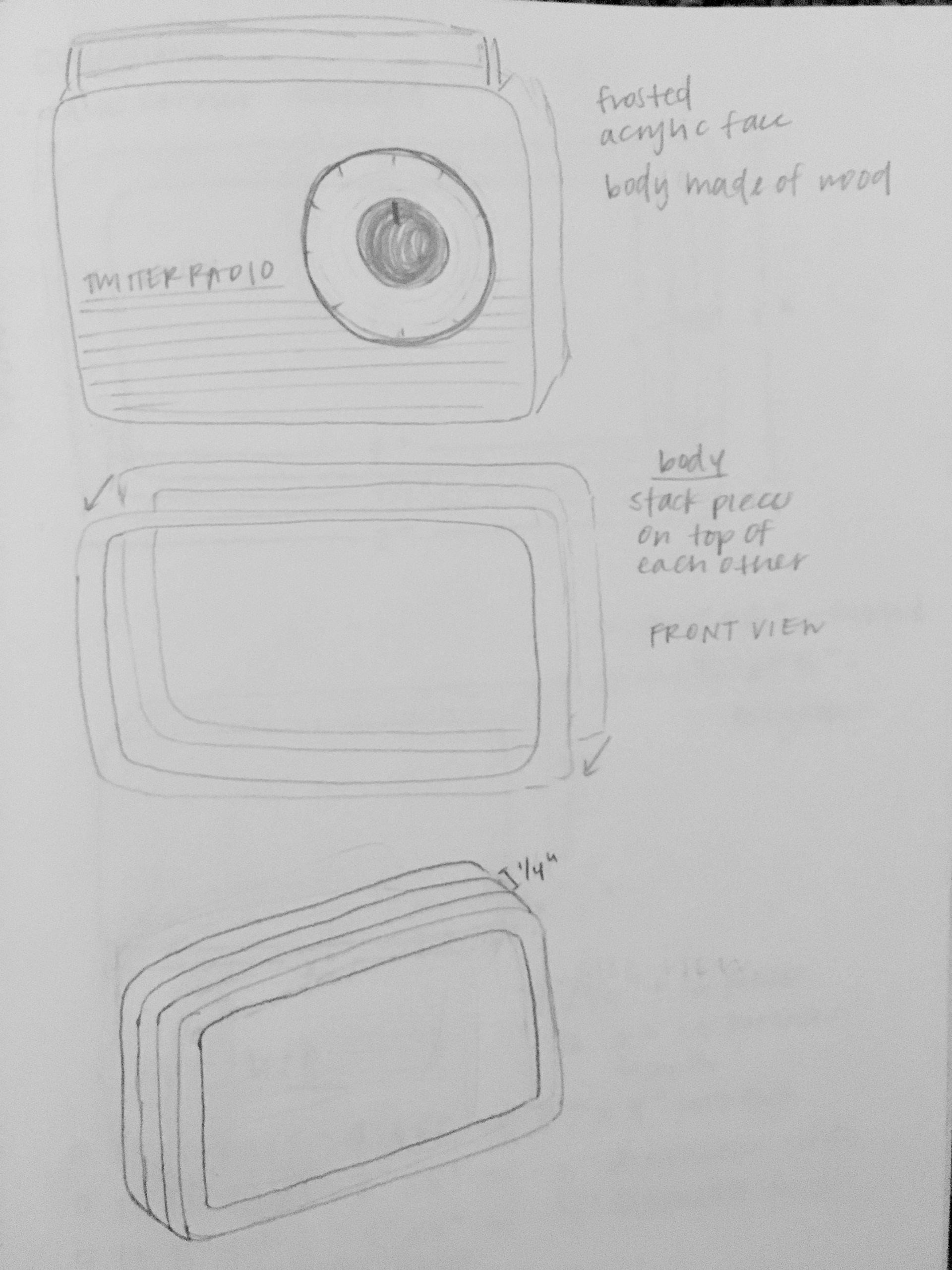

Enclosure sketch

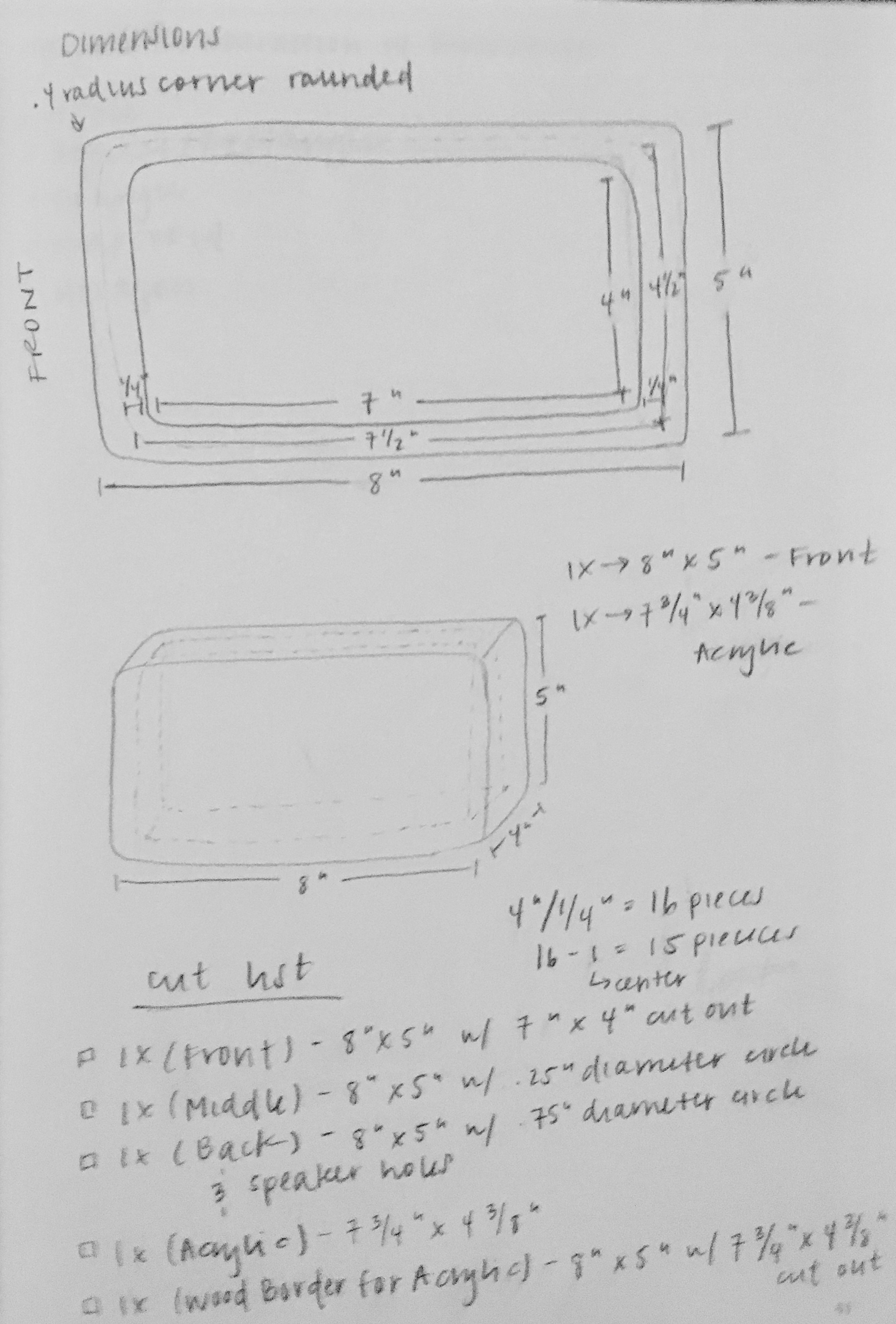

Dimensions

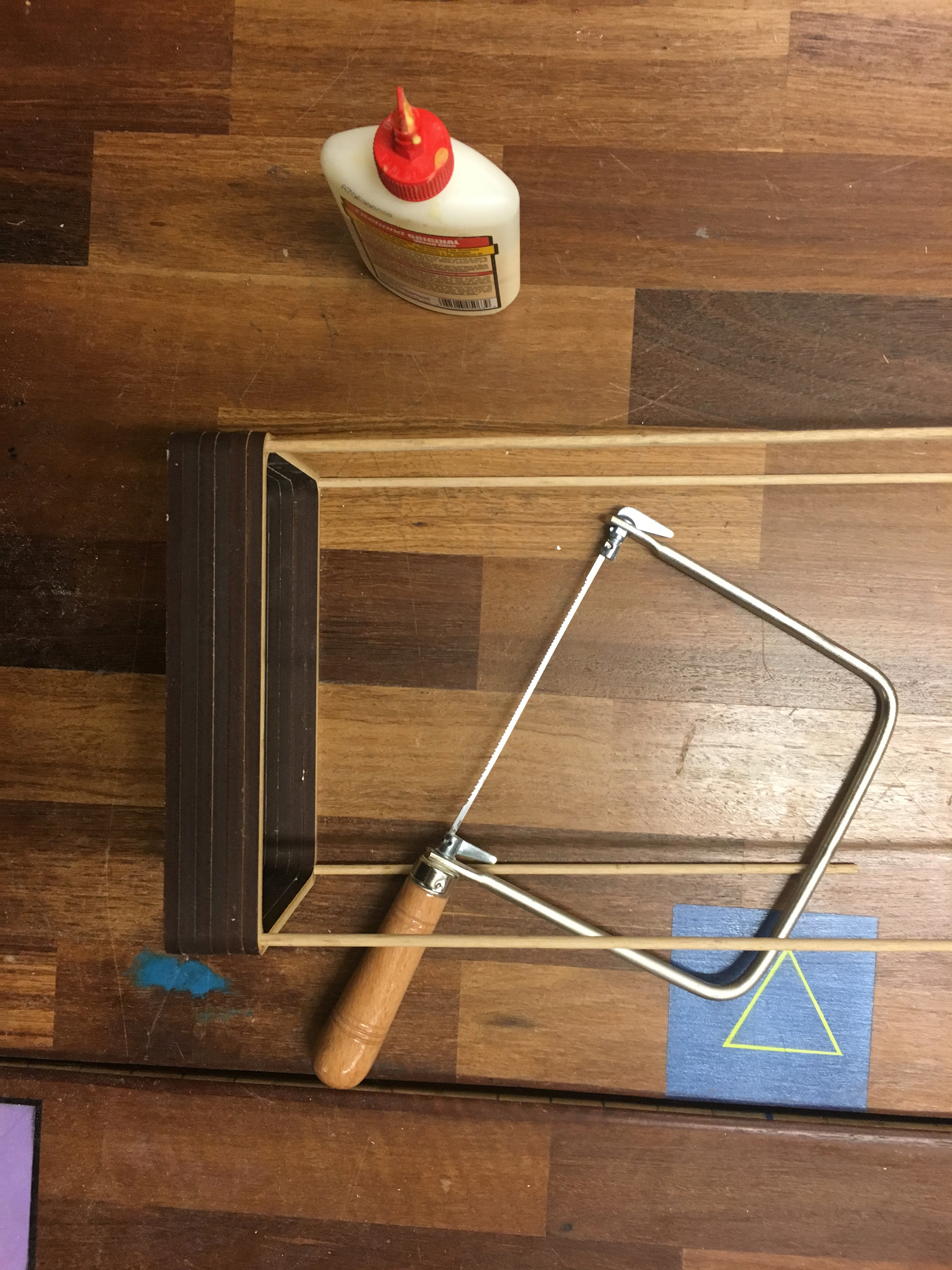

Cardboard prototype

Assembling

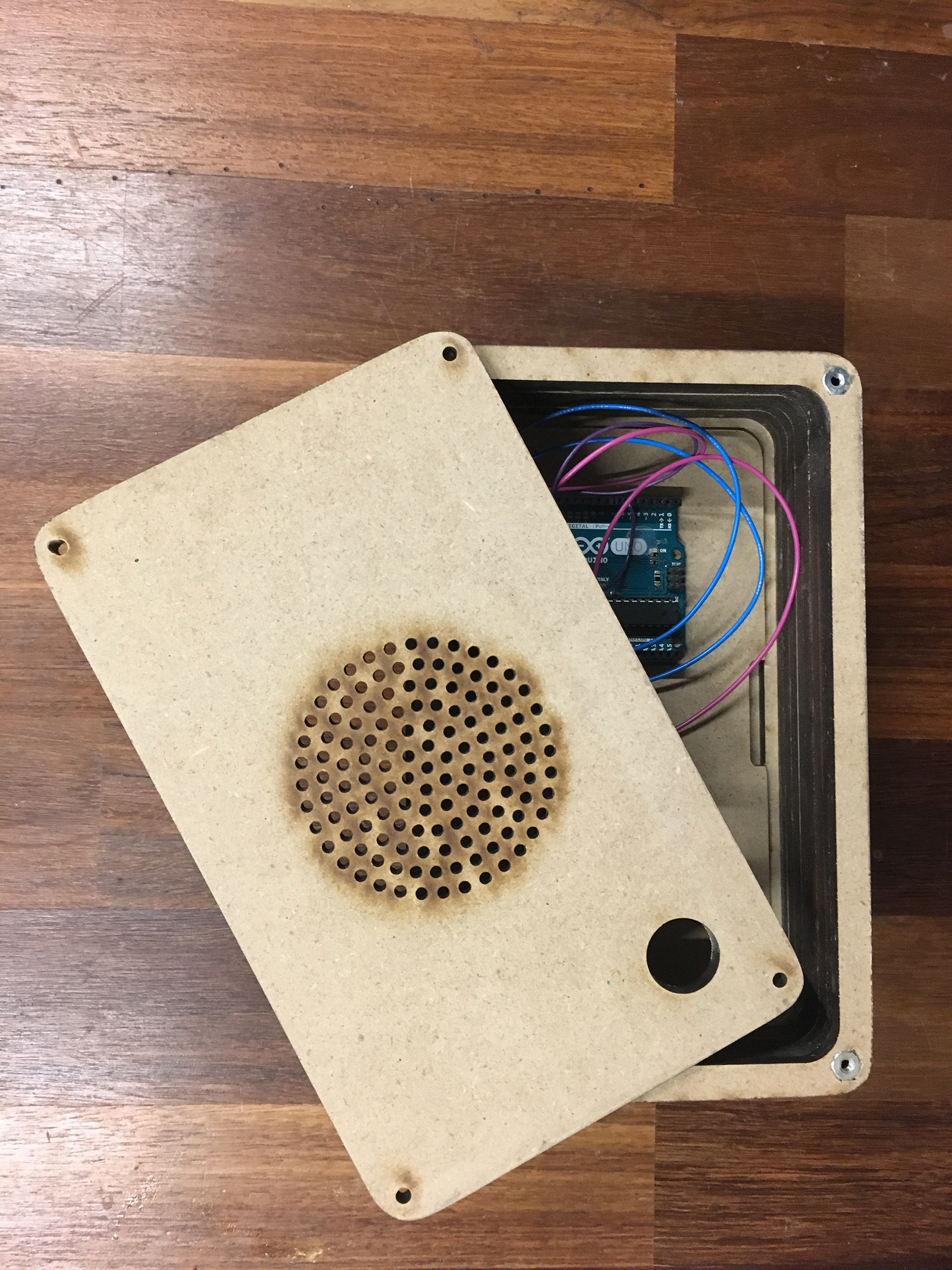

Built enclosure

Internal panel

Spray painting

Adding grips to bottom

Assembling front

Adding electronics

Attaching back

Assembled (Off State)

Assembled (On State)

Coding Process

Every second, there are approximately 6,000 new posts on Twitter. Since I use real-time data, I narrowed the scope of the input data to better accommodate the processing power of my computer. Using the tweepy library in Python, my code reads tweets from Twitter in real time while searching only for #twitterradio. Once a tweet with that hashtag is found, my code runs sentiment analysis on the tweet via Watson, IBM’s AI (Artificial Intelligence). To access Watson, I utilize the watson_developer_cloud library in Python. Watson then returns one of six possible results: joy, anger, disgust, sadness, fear, or undefined. (Watson returns undefined if the tweet does not contain enough data to determine the emotion.)
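The filtering and emotion-selection steps can be sketched in plain Python. This is a minimal illustration, not the project's actual code: the helper names `mentions_hashtag` and `dominant_emotion`, the 0.5 threshold, and the assumption that the Watson response has already been reduced to a dictionary of emotion scores are all hypothetical.

```python
import re

# The five emotions named in this write-up; anything else maps to "undefined".
EMOTIONS = ("joy", "anger", "disgust", "sadness", "fear")
HASHTAG = "#twitterradio"


def mentions_hashtag(text, hashtag=HASHTAG):
    """Return True if the text contains the hashtag (case-insensitive).

    The negative lookahead rejects longer tags such as #twitterradiostation.
    """
    pattern = re.escape(hashtag) + r"(?!\w)"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


def dominant_emotion(scores, threshold=0.5):
    """Pick the highest-scoring known emotion.

    `scores` is assumed to be a dict such as {"joy": 0.8, "anger": 0.1}.
    If no emotion reaches the (assumed) threshold, return "undefined".
    """
    known = {name: scores.get(name, 0.0) for name in EMOTIONS}
    best = max(known, key=known.get)
    return best if known[best] >= threshold else "undefined"


if __name__ == "__main__":
    tweet = "Best concert of my life! #TwitterRadio"
    if mentions_hashtag(tweet):
        print(dominant_emotion({"joy": 0.8, "anger": 0.1}))
```

In a real pipeline, the tweet text would come from the tweepy stream and the scores from the Watson response; both are left out here so the sketch runs without credentials.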

Once an emotion is found, my code sends the data – the emotion – from Python to Arduino using serial communication. In order to communicate between these two platforms, I utilize the pyserial library in Python. Then, I use the playsound library in Python to output audio. Since the audio plays locally on my computer, I connected my computer via Bluetooth to a speaker hidden inside the radio enclosure. This way, the audio appears as though it is coming from the radio itself. Finally, once the song finishes, my code sends a new signal to Arduino to tell the radio to return to its waiting state (rainbow).
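The send, play, and reset sequence described above could look roughly like the sketch below. The one-byte codes, the `react` function, and the song dictionary are assumptions made for illustration; the project may encode its messages differently. The port and the audio player are passed in as arguments so the sketch runs without hardware.

```python
import io

# Hypothetical one-byte codes sent over serial; the real encoding may differ.
CODES = {
    "joy": b"j",
    "anger": b"a",
    "disgust": b"d",
    "sadness": b"s",
    "fear": b"f",
    "undefined": b"u",
}
WAITING = b"w"  # assumed signal telling the radio to return to the rainbow


def react(port, emotion, play, songs):
    """Send the emotion, play its song, then send the waiting signal.

    `port` is any object with a write(bytes) method, and `play` is any
    callable that blocks until the given audio file has finished.
    """
    port.write(CODES.get(emotion, CODES["undefined"]))
    song = songs.get(emotion)
    if song is not None:
        play(song)
    port.write(WAITING)


# Demo with stand-ins: BytesIO plays the role of the serial port and
# list.append the role of the audio player.
fake_port = io.BytesIO()
played = []
react(fake_port, "joy", played.append, {"joy": "joy.mp3"})
print(fake_port.getvalue(), played)
```

With real hardware, `port` could be a pyserial `serial.Serial` object opened on the Arduino's port, and `play` could be `playsound.playsound`, which blocks by default until the audio ends. The port name, baud rate, and file names would depend on the setup.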

On the Arduino side, I again utilize serial communication to look for data being sent from Python. When there is no emotion to display, TwitterRadio stays in its waiting state, and the radio runs the rainbowCycle routine from the strandtest example for Adafruit’s neopixels. Once an emotion is received, the neopixels light up in the color of that emotion: joy is yellow, anger is red, disgust is green, sadness is blue, fear is purple, and undefined is rainbow. To see the full implementation of the code, please visit my GitHub.
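The color lookup can be modeled in a few lines. The sketch below is written in Python rather than Arduino code to stay in one language, and it only mirrors the logic: the exact RGB values, the `frame_colors` helper, and the HSV-based rainbow are assumptions, not the strandtest routine itself.

```python
import colorsys

# Emotion-to-color mapping from the write-up; the RGB values are assumed.
EMOTION_COLORS = {
    "joy": (255, 255, 0),     # yellow
    "anger": (255, 0, 0),     # red
    "disgust": (0, 255, 0),   # green
    "sadness": (0, 0, 255),   # blue
    "fear": (128, 0, 128),    # purple
}


def frame_colors(emotion, n_pixels, step=0):
    """Return one (r, g, b) tuple per pixel for the current frame.

    Known emotions give a solid color. Anything else (waiting or
    undefined) gives a rainbow spread across the strip; increasing
    `step` on each frame shifts the hues so the rainbow appears to move.
    """
    solid = EMOTION_COLORS.get(emotion)
    if solid is not None:
        return [solid] * n_pixels
    colors = []
    for i in range(n_pixels):
        hue = (i / n_pixels + step / 256) % 1.0
        r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
        colors.append((round(r * 255), round(g * 255), round(b * 255)))
    return colors


if __name__ == "__main__":
    print(frame_colors("sadness", 3))
    print(frame_colors("waiting", 4))
```

Treating every unknown message as the rainbow case means the waiting state and the undefined result share one code path, which matches the behavior described above.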